The Dangers of AI "Hallucinations" in Real Estate: When Your AI Assistant Lies to a Client

AI assistants are rapidly becoming common in the Malaysian real estate industry for lead qualification, market updates, and client communication.

But there is a hidden danger most agents underestimate: AI Hallucinations.

A hallucination occurs when an AI confidently gives an answer that is false, not because it intends to lie, but because it fills in missing information with invented facts.

In real estate, this is not a harmless technical error. It can damage trust, cause financial loss, and create legal exposure for both the agent and the agency.

This article explains the risks and outlines the mandatory governance required to protect your business.

1. What Exactly is an AI Hallucination in Property?

A hallucination is when AI:

- Produces information that is factually untrue or invents details that don’t exist.

- Answers confidently even when the data is missing.

- Fills gaps based on "probable" guesses rather than verified facts.

In our industry, this is particularly dangerous because clients assume the AI is accurate, authoritative, and data-driven. They do not realize the AI is sometimes guessing.
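To make the difference between guessing and answering from data concrete, here is a minimal Python sketch. It assumes a small dictionary of verified PSF figures (`VERIFIED_PSF`, hypothetical, with illustrative numbers) standing in for your internal pricing data. `answer_average_psf` is likewise a made-up helper for illustration, not part of any AI product.

```python
from typing import Optional

# Hypothetical verified data: average transacted PSF per project (illustrative figures only).
VERIFIED_PSF: dict[str, float] = {
    "Condo XYZ": 720.0,
}

REFUSAL = ("I don't have verified pricing for that project yet. "
           "Let me check with the agent and get back to you.")


def answer_average_psf(project: str) -> str:
    """Answer only from verified data; refuse instead of guessing."""
    psf: Optional[float] = VERIFIED_PSF.get(project)
    if psf is None:
        # This is the gap a hallucinating model fills with a plausible-sounding number.
        return REFUSAL
    return f"Verified average transacted price for {project}: RM{psf:,.0f} PSF."


print(answer_average_psf("Condo XYZ"))  # answered from verified data
print(answer_average_psf("Condo ABC"))  # no data, so the assistant refuses
```

The design choice is the whole point: when the lookup misses, the function returns a refusal rather than a number.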

2. How AI Hallucinates in Real Estate (Real Scenarios)

These are the most common hallucination risks for property agents:

| Risk Category | Example Scenario | Impact |

|---|---|---|

| A. Wrong Market Pricing | AI states: "Condo XYZ average price is RM950 PSF," when real transacted prices are RM720. | Destroys credibility; misleads sellers on valuation. |

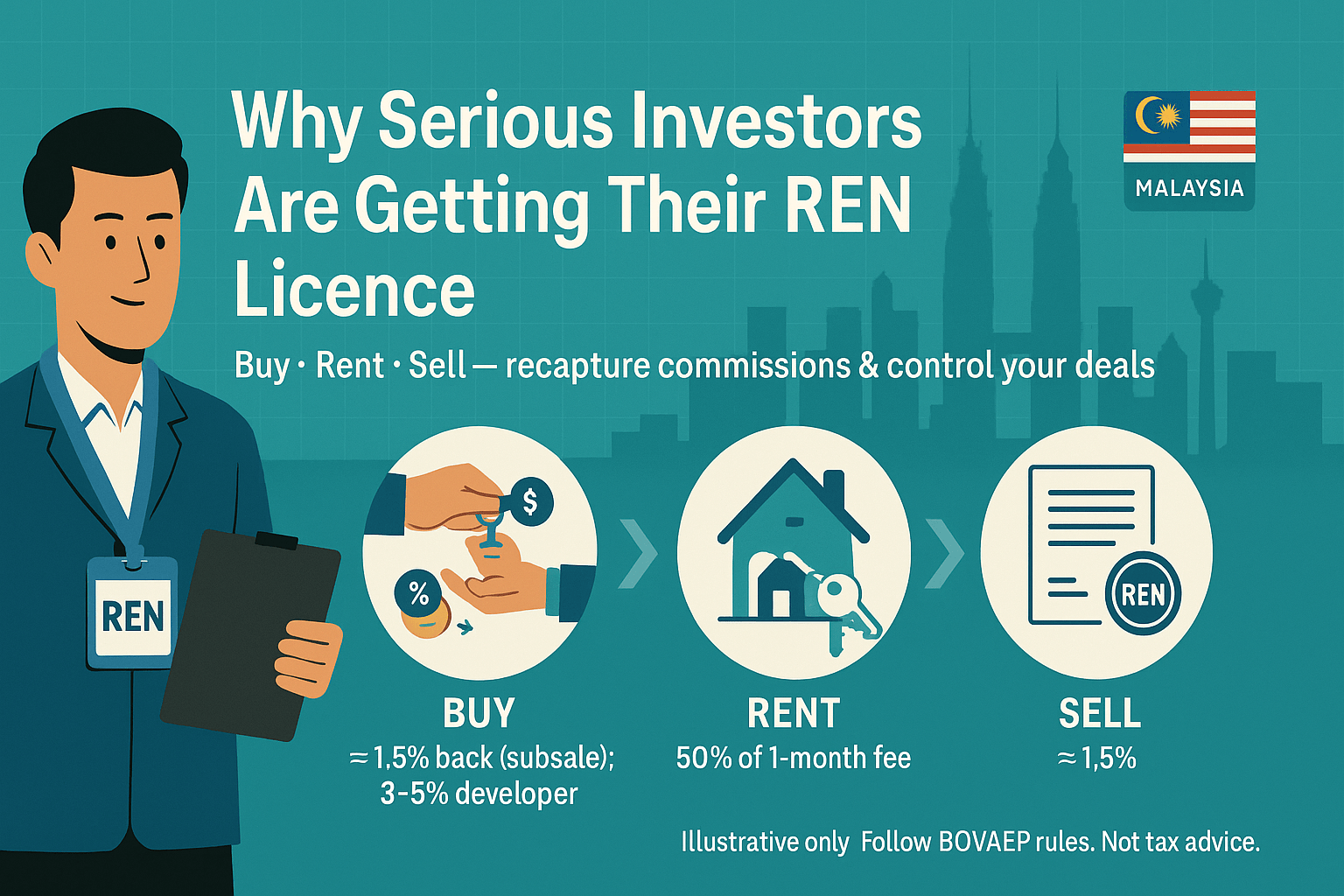

| B. Incorrect Financial Advice | AI gives outdated BLR/BR information, incorrect RPGT rules, or false stamp duty calculations. | Causes real financial loss; creates legal liability. |

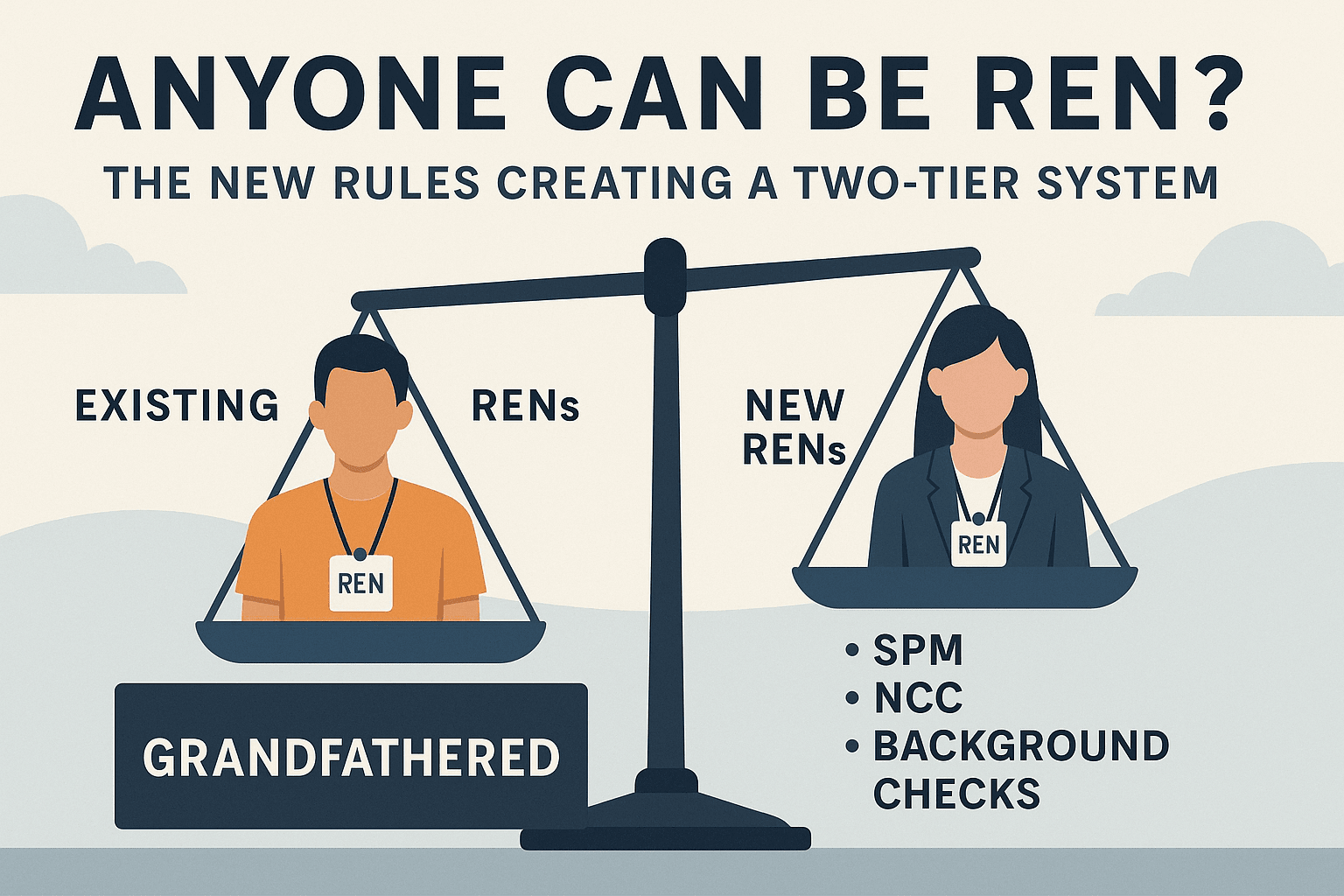

| C. Fake Legal Requirements | AI fabricates procedures for title transfer, developer compliance, or BOVAEP audit requirements. | Exposes the agency to regulatory scrutiny and client complaints. |

| D. Wrong Project Details | AI invents facilities, developer names, or maintenance fees for a new project listing. | Damages professionalism; creates misleading marketing material. |

| E. False Agency Statements | AI promises guarantees you never made or misrepresents your commission structure. | Leads to client disputes and compliance breaches. |
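Scenario A is also the easiest to catch mechanically. The minimal Python sketch below assumes you hold a list of verified transacted PSF figures per project (`TRANSACTED_PSF`, hypothetical, with illustrative numbers) and flags any "RM… PSF" figure in an AI draft that strays more than an assumed 10% from the verified median. The function name, tolerance, and regex are illustrative choices, not a standard.

```python
import re
from statistics import median

# Hypothetical verified transactions (PSF, in RM) per project; figures are illustrative.
TRANSACTED_PSF: dict[str, list[float]] = {"Condo XYZ": [700.0, 720.0, 735.0]}

# Matches figures such as "RM950 PSF" or "RM 1,050 psf".
PSF_PATTERN = re.compile(r"RM\s?(\d[\d,]*(?:\.\d+)?)\s?PSF", re.IGNORECASE)


def check_psf_claim(project: str, ai_text: str, tolerance: float = 0.10) -> list[str]:
    """Flag PSF figures in AI output that stray too far from verified transactions."""
    records = TRANSACTED_PSF.get(project)
    if not records:
        return [f"No verified transactions for {project}; do not quote a PSF figure."]
    reference = median(records)
    warnings = []
    for match in PSF_PATTERN.finditer(ai_text):
        quoted = float(match.group(1).replace(",", ""))
        deviation = abs(quoted - reference) / reference
        if deviation > tolerance:
            warnings.append(
                f"Quoted RM{quoted:,.0f} PSF is {deviation:.0%} away from "
                f"the verified median of RM{reference:,.0f} PSF."
            )
    return warnings


print(check_psf_claim("Condo XYZ", "Condo XYZ average price is RM950 PSF."))
# ['Quoted RM950 PSF is 32% away from the verified median of RM720 PSF.']
```

The median is used instead of a plain average so that one unusual transaction does not shift the reference figure much; either choice works as long as the reference comes from verified records rather than from the AI.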

3. The Risk to Agents and Agencies

AI hallucinations create four major risks that undermine the foundations of a property business:

- 1. Reputational Damage: A single wrong, AI-generated answer destroys trust and makes you look careless.

- 2. Legal Liability: If your AI assistant gives incorrect financial or tax advice, you carry the responsibility, not the AI company. The agency faces claims, complaints, and regulatory scrutiny (PDPA, AMLA, BOVAEP).

- 3. Lost Deals: Misstating price, facilities, or loan requirements instantly erodes client confidence, causing them to walk away.

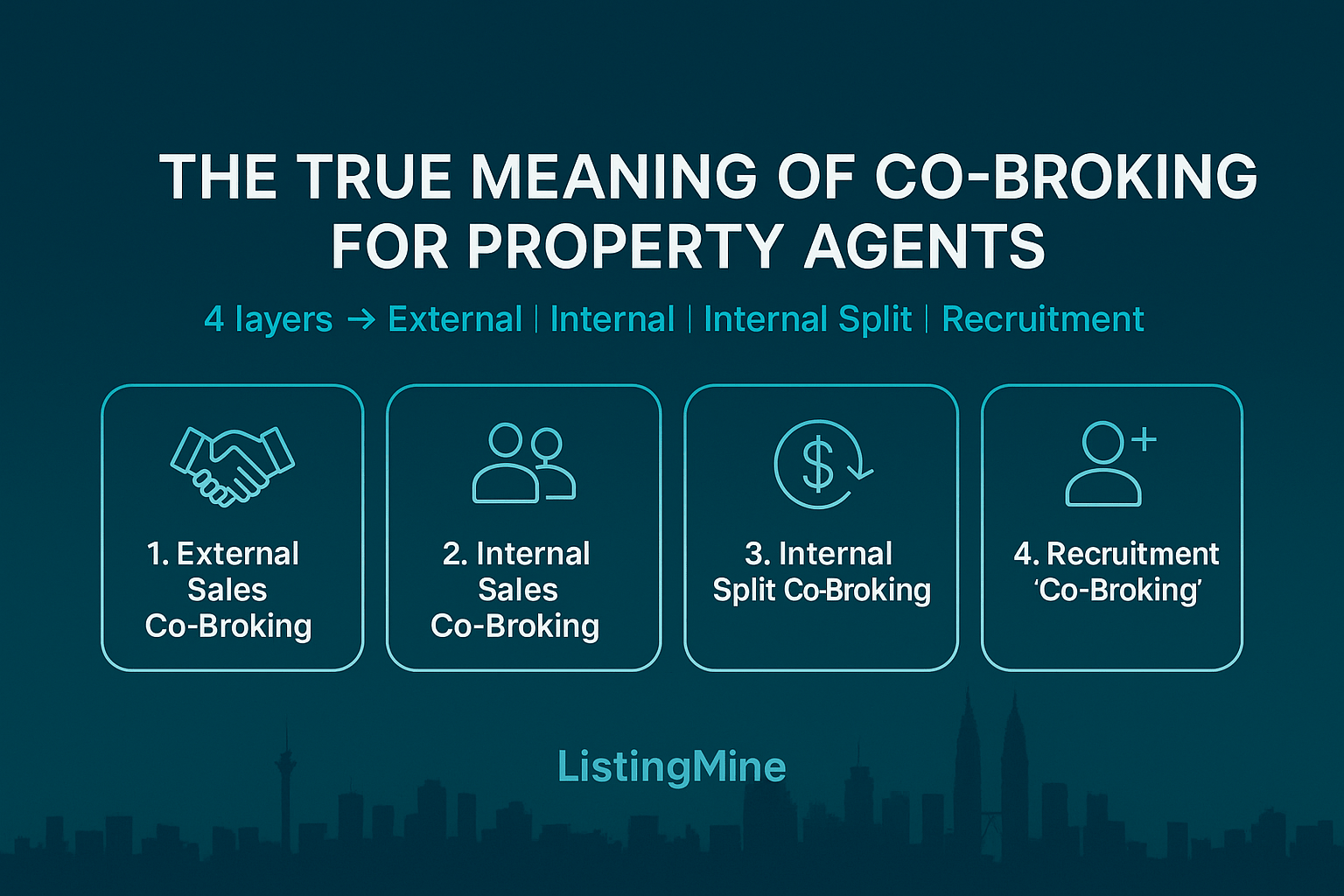

- 4. Internal Confusion: If teams use the AI's guesses as "truth," it creates inconsistent SOPs, wrong workflows, and operational conflict.

4. Mandatory Governance: How to Minimize Hallucinations

AI hallucinations can be reduced, but never eliminated. You must build strict guardrails.

| Governance Rule | Actionable Solution |

|---|---|

| A. Use a Custom GPT (The Guardrail) | Never rely on generic ChatGPT. Use a custom agent that is explicitly instructed to refuse to answer legal questions and refuse to invent numbers. |

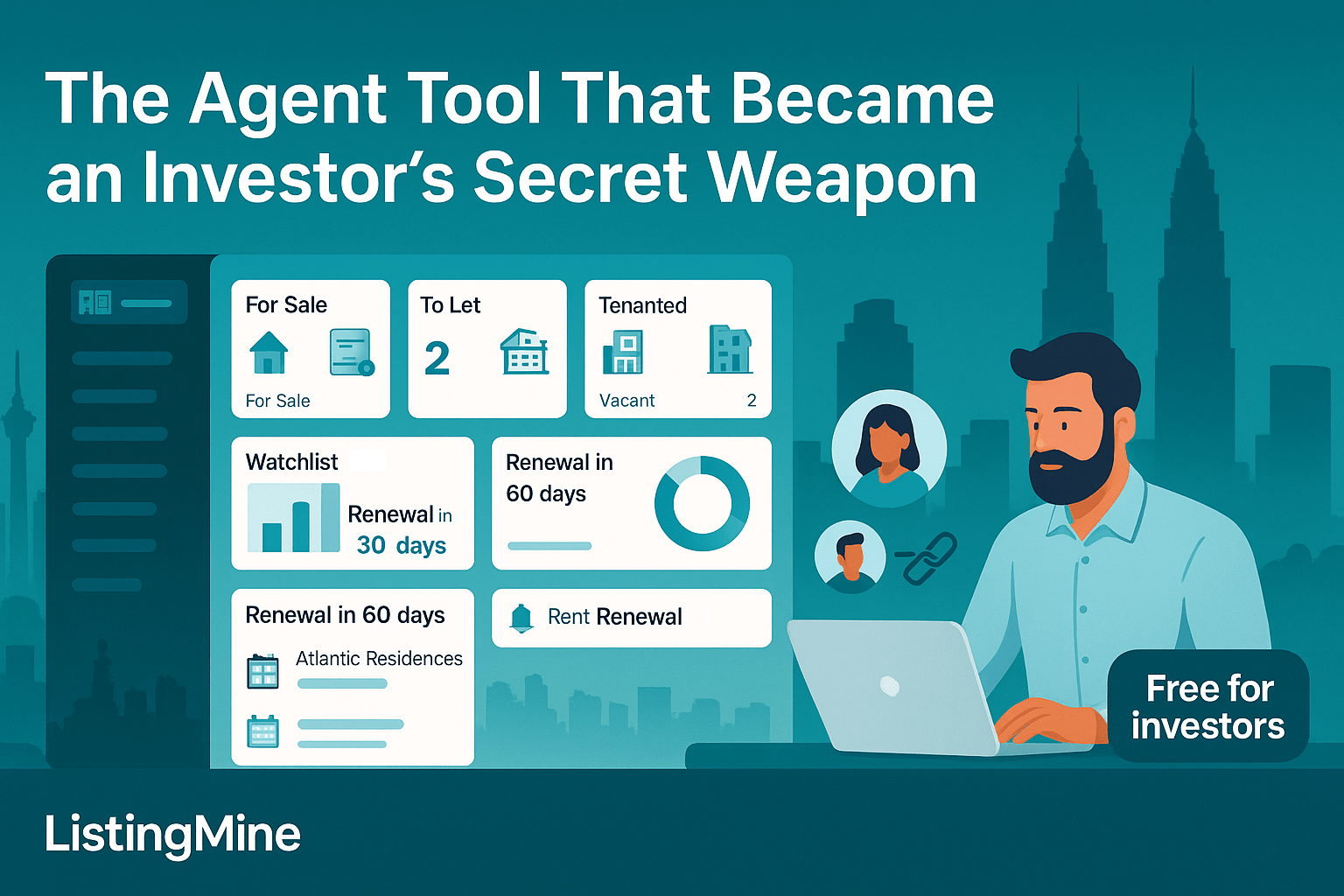

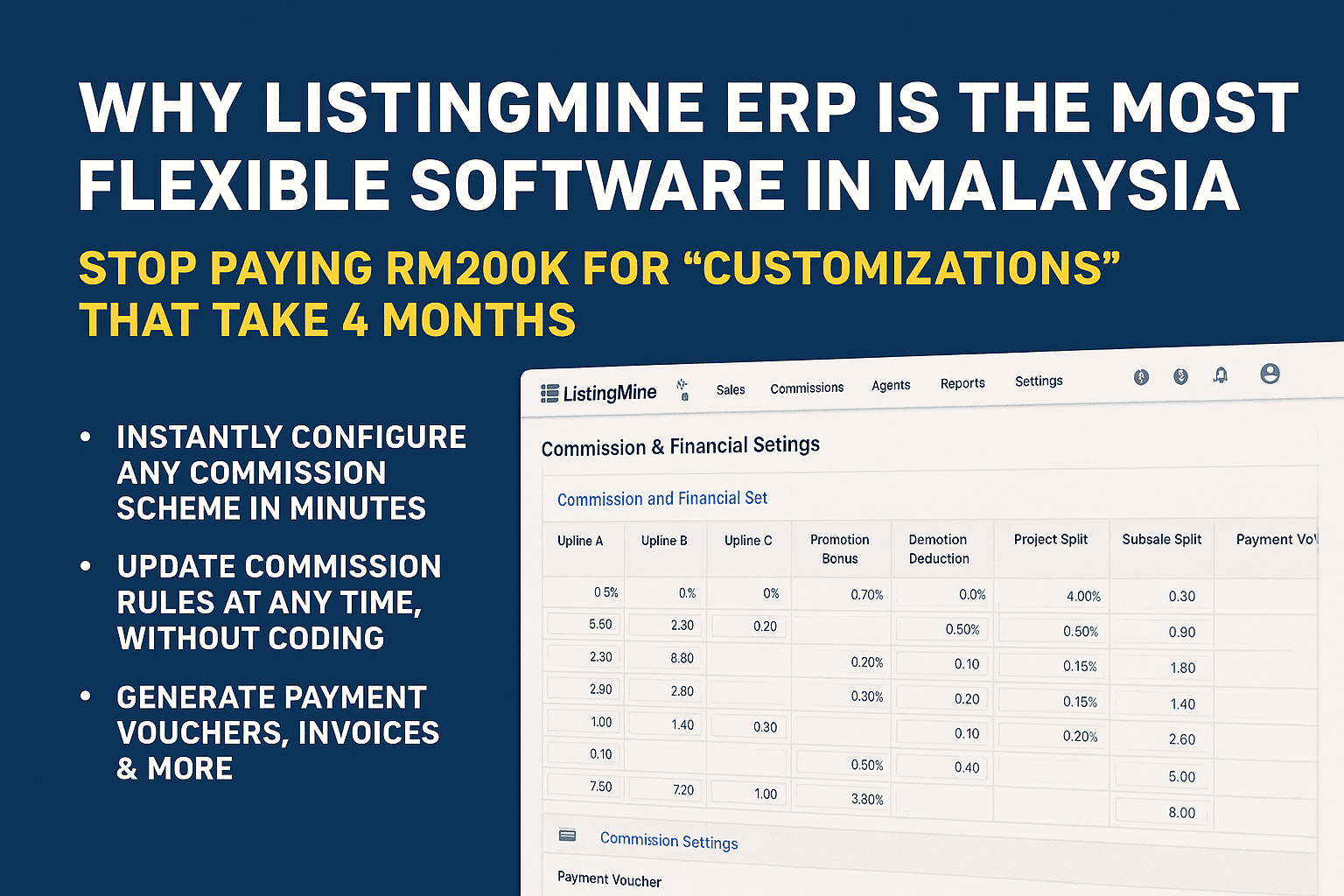

| B. Connect to Verified Data | Connect your GPT only to trusted sources: internal pricing sheets, ListingMine ERP data, ACN workflow documents, and commission structure SOPs. |

| C. Force Disclaimers | Every AI output involving pricing, legal matters, or loan advice must include: "This is an AI-generated estimate. Please verify details with a lawyer or banker." |

| D. Ban Declarative Financial Advice | Train your GPT to guide but not declare. Example Response: "I cannot provide legal opinion, but here is the general process… Please consult a lawyer or banker." |

| E. Implement Human Review | AI should never talk directly to clients without review. Workflow: AI Drafts → Agent Reviews → Agent Edits → Agent Sends. |

| F. Train the Team | Agents must understand that AI is a pattern machine, not a fact machine. They must verify every single output. |
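Rules C and E can be enforced in software rather than left to habit. The minimal Python sketch below assumes a simple in-house messaging step sitting between the AI and the client; every name in it (`ai_draft`, `agent_review`, `send`, the `SENSITIVE` keyword pattern) is hypothetical and not part of ChatGPT or any Custom GPT feature. It attaches the rule C disclaimer to drafts that touch pricing, legal, or loan topics, and refuses to send anything an agent has not reviewed.

```python
import re
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Optional

# Disclaimer wording from rule C.
DISCLAIMER = ("This is an AI-generated estimate. "
              "Please verify details with a lawyer or banker.")

# Hypothetical trigger pattern for pricing, legal and loan topics; a real list needs more care.
SENSITIVE = re.compile(
    r"\b(?:psf|prices?|loans?|stamp duty|rpgt|legal|lawyer|title)\b|\bRM\s?\d",
    re.IGNORECASE,
)


class Status(Enum):
    DRAFTED = auto()
    REVIEWED = auto()
    SENT = auto()


@dataclass
class Message:
    text: str
    status: Status = Status.DRAFTED
    history: list[str] = field(default_factory=list)


def with_disclaimer(text: str) -> str:
    """Rule C: append the disclaimer when the text touches a sensitive topic."""
    if SENSITIVE.search(text) and DISCLAIMER not in text:
        return f"{text}\n\n{DISCLAIMER}"
    return text


def ai_draft(text: str) -> Message:
    """Step 1: the AI only ever produces a draft, never a sent message."""
    msg = Message(with_disclaimer(text))
    msg.history.append("AI drafted")
    return msg


def agent_review(msg: Message, agent: str, edited_text: Optional[str] = None) -> Message:
    """Steps 2-3: a named agent reviews and may edit; the disclaimer is re-checked."""
    msg.history.append(f"reviewed by {agent}")
    if edited_text is not None:
        msg.text = with_disclaimer(edited_text)
        msg.history.append(f"edited by {agent}")
    msg.status = Status.REVIEWED
    return msg


def send(msg: Message) -> str:
    """Step 4: refuse to send anything an agent has not reviewed."""
    if msg.status is not Status.REVIEWED:
        raise PermissionError("Draft has not been reviewed by an agent.")
    msg.status = Status.SENT
    msg.history.append("sent")
    return msg.text


draft = ai_draft("Condo XYZ transacted at around RM720 PSF last quarter.")
agent_review(draft, agent="Aisyah")  # hypothetical agent name
print(send(draft))
print(draft.history)  # ['AI drafted', 'reviewed by Aisyah', 'sent']
```

Because `send` raises unless the status is `REVIEWED`, the "AI never talks directly to clients" rule is enforced by the code path itself, and the `history` list doubles as a simple audit trail.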

5. The Real Danger: Agents Who Overtrust AI

The biggest problem is not the hallucination itself. It is the agent who thinks AI is always right.

These agents stop thinking, stop verifying, and outsource their professional judgment to a machine that sometimes makes things up. This is professionally dangerous and undermines the very reason clients need a human advisor.

Conclusion: Use AI, But Never Blindly

AI makes average agents better by handling repetition. But it makes careless agents dangerous by providing false certainty.

In real estate—an industry built on trust, accuracy, and legal compliance—you cannot afford to let your AI assistant lie.

The future of real estate operations will combine: AI assistants + ACN workflows + ERP systems + verified data + human approval layers. This is the only path to safe, auditable automation.